The Biden administration said Friday it struck a deal with some of the biggest U.S. technology companies to manage the risks posed by artificial intelligence, including ensuring users know when content is AI-generated and allowing external security testing of their systems.

The White House said the commitments were being made by seven major AI companies: Amazon.com (ticker: AMZN), Meta Platforms (META), Microsoft (MSFT) and its investee company OpenAI, Alphabet’s (GOOGL) Google, Inflection and Anthropic.

“These commitments, which the companies have chosen to undertake immediately, underscore three principles that must be fundamental to the future of AI—safety, security, and trust—and mark a critical step toward developing responsible AI,” the White House said in a statement.

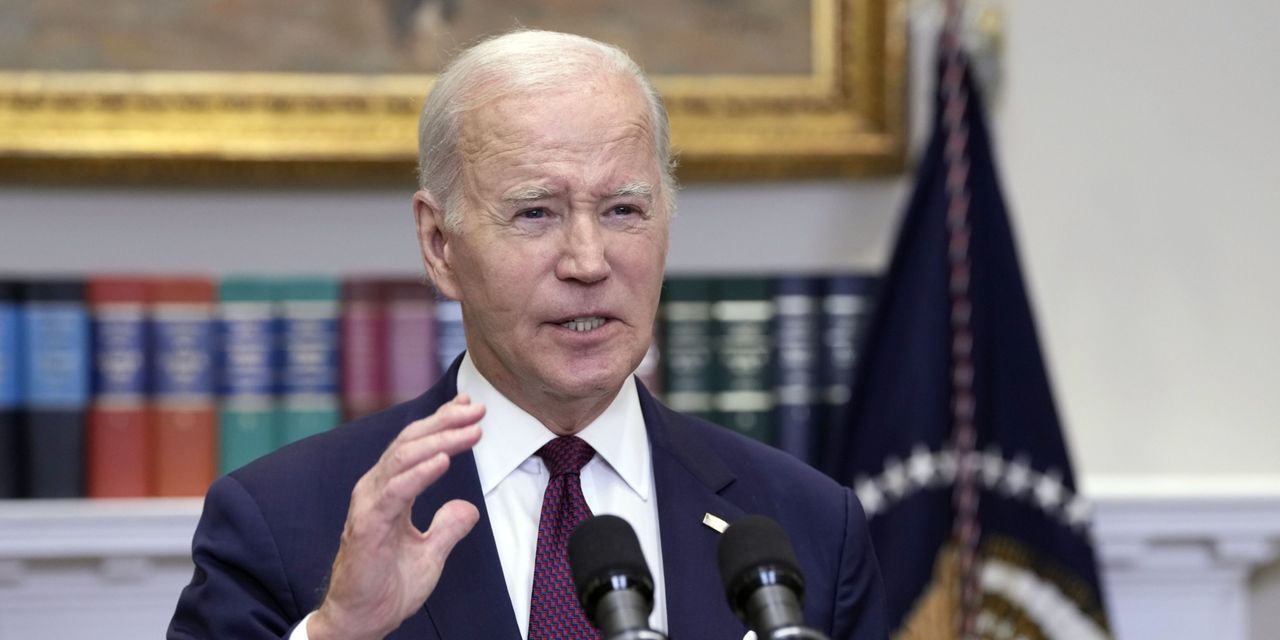

Leaders from the companies will meet with President Joe Biden on Friday, with several having already been called to the White House earlier this year.

The announcement on Friday didn’t outline how the AI safety commitments would be enforced, but the White House said it was developing an executive order and would pursue bipartisan legislation on the topic.

Write to Adam Clark at [email protected]
