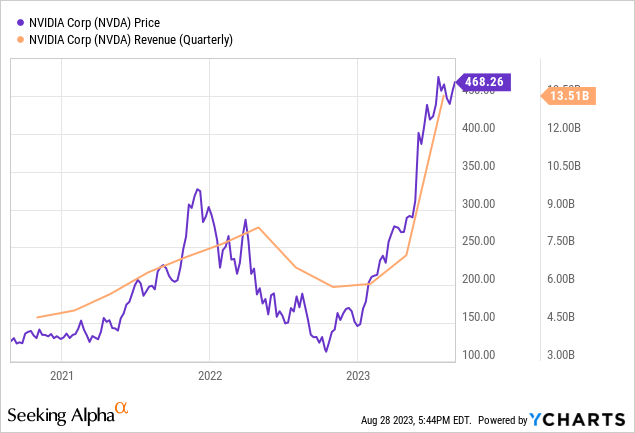

I covered Nvidia (NASDAQ:NVDA) back in April this year with a hold position, mainly because of the competition it faced in AI chips. Since then, the stock has appreciated by 70%, mostly on upbeat chip sales figures. With the first-quarter 2024 (Q1) results reported in May, the company not only beat the topline consensus but also provided guidance that was far beyond analysts’ expectations.

Revenue expectations were also exceeded in Q2, which ended in July. For Q3, which ends in October, guidance exceeds analysts’ estimates by a whopping $3.5 billion, and stock buybacks of $25 billion are also planned. Despite all these market-moving catalysts, the stock did not get the same lift as in May, as charted below.

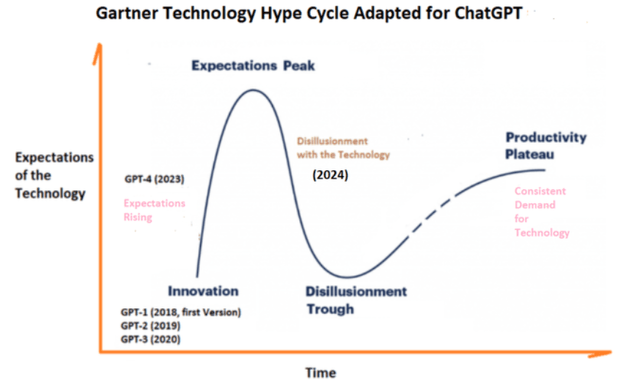

This raises the question of whether, after a YTD gain of more than 220%, the time has come for a reversal, as this thesis will argue. I back my position by focusing on Nvidia’s main demand driver, OpenAI’s ChatGPT, and by using the Gartner Technology Hype Cycle to illustrate how we may have already reached the peak of expectations.

First, I assess the demand status.

Some Differing Views on Demand

According to Nvidia’s CEO during the Q2 earnings call, demand remains strong, with major CSPs (cloud service providers, or hyperscalers) like Amazon (AMZN) and Microsoft (MSFT) continuing to order. If true, this would go against the view of Bank of America (BAC) analyst Vivek Arya that “the overall pie of hyperscaler spending is not growing”.

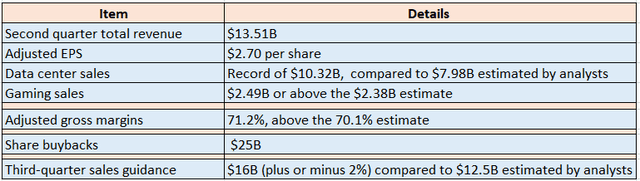

Furthermore, baked into the sales guidance of $16 billion for Q3 (as per the table below) is demand from consumer internet companies like Meta Platforms (META) and from enterprises racing to deploy their own LLMs (large language models) instead of leasing services from hyperscalers. In this respect, OpenAI, which is financially backed by Microsoft, is not the only one demanding GPUs. Others are developing their own LLMs, financed by large inflows of venture capital. Part of this demand comes from developers who don’t necessarily need the chips now but are placing orders anyway so as not to be late, and this is translating into high order volumes for Nvidia.

Key figures (www.seekingalpha.com)

Notably, now that the executives have quelled supply fears, customers are less likely to keep exhibiting the same FOMO (fear of missing out) mentality as before. Worse, there may be order cancellations if ChatGPT does not deliver the expected performance milestones.

In this respect, enthralled by its ease of use, millions have rushed to OpenAI’s website to test ChatGPT. It is true that it generates intuitive reports on the fly after sifting through the tons of unstructured data available on the internet, but there are also problems.

Inaccurate ChatGPT Results and the Hype

A survey by TECHnalysis Research covering one thousand IT decision makers who championed their corporate Generative AI efforts reveals that 99% of companies still have issues with the technology, nearly six months after the latest stable version of ChatGPT (GPT-4) was launched on March 14. In addition to copyright concerns, the other top issue, flagged by 55% of survey participants, is “inaccuracy”. This is a staggeringly high number for a software tool, especially at a time when people are used to the accuracy of online searches. Costs have also been highlighted as an issue, but given that a single Nvidia H100 is priced at around $30K, these are not likely to come down soon.

Furthermore, the launch of another version of ChatGPT to address the inaccuracy issue may be delayed, as OpenAI itself was facing a shortage of GPUs only two months back. This may lead more users to become disillusioned with the technology, as is often the case when a lot of expectations have been built up. To track such expectations, researchers at Gartner have developed a hype cycle (as shown below), which seems to indicate that the peak of expectations has already been reached.

Gartner hype cycle populated with data from techtarget.com (www.gartner.com)

Thus, there is a possibility that customers, especially from enterprises that constitute about 30% of the data center business, do not find enough value in ChatGPT to justify ROI. This can in turn lead to a pause in demand for GPUs, which are the building blocks for ChatGPT.

Coming back to the revenue projections, these also include the uptake of InfiniBand which provides better performance with large IT workloads than normal network connectivity and saw significant traction during Q2. However, as per the words of the CEO himself, InfiniBand is preferable for use cases where “the infrastructure is very large”. Now, this sort of network tends to be deployed mostly by CSPs which constitute about 60% of the data center segment revenues. On the other hand, things may be different for consumer internet and enterprises which constitute the remaining 40% as InfiniBand comes at a huge price tag.

Looking at the revenue mix, Nvidia’s current growth is heavily skewed toward AI, with data center sales forming 76.4% of sales in Q2 compared to 59.5% in Q1. This means that it faces a high level of revenue concentration risk. There is also supply uncertainty.

The Supply, Competition, and Valuations

One factor that initially helped supply is that Taiwan Semiconductor Manufacturing Company (TSM) had spare capacity as a result of weakness in PC demand since the beginning of this year. However, one part of the semiconductor supply chain is specific to AI chips and GPUs: CoWoS (chip-on-wafer-on-substrate), a packaging technology that puts together the high-bandwidth memory (HBM3) produced by SK Hynix, the South Korean semiconductor supplier, and Nvidia’s H100s.

According to an article by SemiAnalysis, the slack capacity has already been consumed, but during the earnings call, the CFO mentioned that the company has added partners to respond to demand over the next several quarters. One such partner could be Samsung (OTCPK:SSNLF), according to a source, but the South Korean company would first have to pass quality testing. Another limiting factor could be that its packaging technology may not deliver the same production volume as TSMC’s CoWoS.

In the same vein, CoWoS is not exclusively reserved for Nvidia. It is also used by other customers like Broadcom (AVGO), Marvell (MRVL), and especially Advanced Micro Devices (AMD), whose latest MI300X can support 192 GB of memory, more than the H100, which goes only up to 120 GB.

Therefore, despite its lead, Nvidia may still face competition, especially if CIOs do not sustain ordering as expected and instead prioritize costs once they have a more precise idea of their requirements. To get a grasp of Nvidia’s pricing power, consider that its gross profit in Q2 rose by 234% YoY, lifting the gross margin to 71%, while revenues increased by less than half that rate, or 101%.

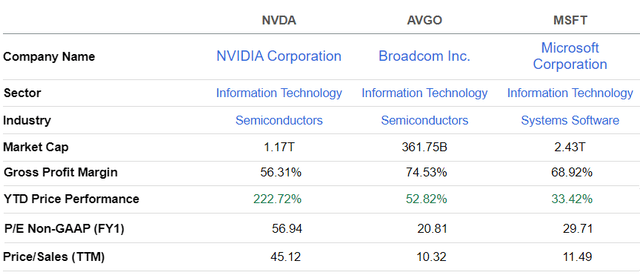

Consequently, its valuation can now be compared with Broadcom and Microsoft, which operate with similar gross margins and both offer hardware and software, albeit in different proportions of their product mix. As for Nvidia, in addition to selling hardware, it also sells a lot of software as part of its data center products and offers cloud-based AI services with its HGX H100 AI Supercomputing platform.

Comparison with AVGO and MSFT (seekingalpha.com)

In my view, Nvidia’s much higher P/S of 45.12x, which is 4 to 4.5 times that of IT peers, simply cannot be justified, and the share price could drop to the $306-$314 range where it was trading before May 24, the date when it announced sales forecasts more than 50% above Wall Street estimates. The midpoint of that range, $310, equals a P/S of 29.89x (45.12 / 468 x 310) based on the current share price of $468.

This is still 2.5 to 3 times above peers but can be justified as, looking through the hype, Generative AI is also about improving productivity.
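The scaling used for the 29.89x figure can be reproduced with a minimal sketch. It assumes, as the calculation above implicitly does, that trailing sales and the share count stay fixed so that P/S moves linearly with the share price. The function and variable names are illustrative only.

```python
def implied_ps(current_ps: float, current_price: float, new_price: float) -> float:
    """Scale the P/S ratio linearly with price (sales and share count held fixed)."""
    return current_ps / current_price * new_price


CURRENT_PS = 45.12       # P/S quoted in the article
CURRENT_PRICE = 468.0    # share price used in the article
RANGE_LOW, RANGE_HIGH = 306.0, 314.0  # pre-May 24 trading range

midpoint = (RANGE_LOW + RANGE_HIGH) / 2
ps_at_midpoint = implied_ps(CURRENT_PS, CURRENT_PRICE, midpoint)

print(f"Midpoint ${midpoint:.0f} -> implied P/S {ps_at_midpoint:.2f}x")
```

The same function can be applied to any other price level, since only the price input changes.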

The Productivity Plateau and Final Target of $397

This follows research by PricewaterhouseCoopers and McKinsey, which have estimated the potential dollar amount of gains and the percentage improvement in productivity, respectively.

Furthermore, the Gartner Hype Cycle above also shows that a productivity plateau is to be reached in the longer term, when corporate CEOs have a more realistic idea of the opportunities AI can bring to their bottom lines. Before that, however, expectations will likely subside during the trough of disillusionment. This period will be characterized by a high degree of volatility as adverse news about ChatGPT reaches the market. In addition to the inaccuracies I mentioned above, other ChatGPT shortcomings have been highlighted by Purdue University, and the Federal Trade Commission is carrying out an investigation on the grounds that personal data were put at risk.

Translating this progression in expectation level from the hype cycle to stock price action, after the reversal to $306-$314, the price could rise again to stabilize around $397 as the productivity plateau is reached. This figure is obtained by subtracting $310 (the midpoint of $306 and $314) from the August 24 peak of $484, dividing the result by two, and adding that amount ($87) to $310, which yields $397. This represents a 15% downside compared to the current price of $468, and this bearish position is also supported by Nvidia’s momentum indicators, with both the 50-day and 100-day moving averages pointing to a downside.
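The arithmetic behind the $397 target can be checked with a short sketch. It simply restates the steps described above (a recovery of half the distance between the $310 midpoint and the $484 peak) and is not a valuation model. The variable names are illustrative.

```python
RANGE_LOW, RANGE_HIGH = 306.0, 314.0  # expected reversal range
PEAK_PRICE = 484.0                    # August 24 peak
CURRENT_PRICE = 468.0                 # share price used in the article

floor_price = (RANGE_LOW + RANGE_HIGH) / 2       # midpoint of the range
half_recovery = (PEAK_PRICE - floor_price) / 2   # half the gap back to the peak
target_price = floor_price + half_recovery
downside = (CURRENT_PRICE - target_price) / CURRENT_PRICE

print(f"Floor ${floor_price:.0f}, half recovery ${half_recovery:.0f}")
print(f"Target ${target_price:.0f}, downside {downside:.1%} from ${CURRENT_PRICE:.0f}")
```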

Now, $397 comes to a P/S of 38.27x (45.12 / 468 x 397), which is 3x to 3.7x that of peers, but I believe this is a fair value as it takes into account the hype factor, the productivity potential, and the demand for AI chips, which only Nvidia can currently produce at scale. Also, the company may be benefiting from customers slashing normal cloud spending and reorienting it in favor of AI.

In conclusion, my outlook is mainly based on cracks likely to appear on the demand front, some supply uncertainty, and competition. In my view, in addition to AMD, Intel (INTC) can still bring value to those looking for better price/quality with its Gaudi 2 accelerators, following recent performance results. Along the same lines, Nvidia’s main demand driver, namely ChatGPT, may simply “no longer be the only game in town”, as there are now alternatives like Meta’s LLaMA and others.

Finally, investors will note that I have refrained from calculating a lower target price based solely on an academic comparison with peers, as this would have understated Nvidia’s current monopolistic position. That position, by the way, if it lasts, will work against American tech innovation, which has constantly lowered costs through the rapid development of competing technologies over the last two decades.
